Apple to Scan iPhones for Evidence of Child Abuse Starting with iOS 15

Apple this week announced plans to expand protections for children, giving itself the ability to scan user photos for known Child Sexual Abuse Material (CSAM).

“At Apple, our goal is to create technology that empowers people and enriches their lives — while helping them stay safe,” the company says on a new page on its website dedicated to child protections.

“We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM),” it adds.

Technology developed in collaboration with child safety experts and used in iOS and iPadOS will allow the iPhone maker to detect known CSAM images stored in iCloud Photos.

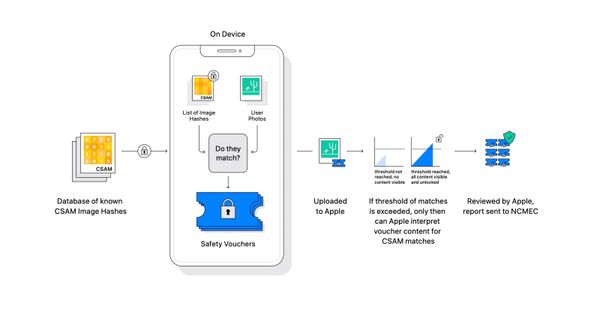

Instead of scanning images in the cloud, Apple will perform on-device matching using a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations. The database is stored on users’ devices as a set of hashes that users cannot read.
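To make the idea of matching against a hash database concrete, here is a minimal sketch in Python. It is an illustration only, not Apple's implementation: it uses SHA-256 as a stand-in hash (which only matches byte-identical files), and the names `image_digest`, `build_database` and `matches_known` are hypothetical. The real system also hides the match result from the device, which this sketch does not attempt.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    """Return a hex digest for an image (SHA-256 is a stand-in, an assumption)."""
    return hashlib.sha256(image_bytes).hexdigest()


def build_database(known_images) -> frozenset:
    """Build a set of digests; only hashes are kept, never the images themselves."""
    return frozenset(image_digest(data) for data in known_images)


def matches_known(image_bytes: bytes, database: frozenset) -> bool:
    """Check whether an image's digest appears in the database."""
    return image_digest(image_bytes) in database


# Placeholder byte strings stand in for image files.
known_db = build_database([b"known-image-A", b"known-image-B"])

print(matches_known(b"known-image-A", known_db))   # True
print(matches_known(b"holiday-photo", known_db))   # False
```

The point of the sketch is that the device only ever holds digests, so the database itself reveals nothing viewable about the images it was built from.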

The technology is detailed in two technical papers (1,2) the company published this week. However, Apple also offers a description of the CSAM detection feature in plain English:

“Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image.”

Then, using a technique called “threshold secret sharing,” the system ensures that the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content. Apple claims the threshold provides “an extremely high level of accuracy”, with less than a one-in-one-trillion chance per year of incorrectly flagging a given account.
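For readers unfamiliar with threshold secret sharing, the sketch below shows the general idea using Shamir's scheme, a textbook construction. It is an assumption that this resembles what Apple uses; the article does not specify the scheme, and the prime, the function names `split_secret` and `recover_secret`, and the numbers in the example are all illustrative. A secret is split into shares so that any `threshold` shares recover it, while fewer shares do not.

```python
import secrets

# A large prime field for the arithmetic (2**127 - 1 is a Mersenne prime).
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int):
    """Split `secret` into `num_shares` points on a random polynomial
    of degree `threshold - 1` whose constant term is the secret."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= num_shares:
        raise ValueError("need 1 <= threshold <= num_shares")
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = split_secret(123456789, threshold=3, num_shares=5)
print(recover_secret(shares[:3]))   # 123456789
print(recover_secret(shares[2:5]))  # 123456789
```

With only two of the five shares, interpolation yields an unrelated value rather than the secret, which is the property that keeps data unreadable until the threshold is crossed.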

If the threshold is exceeded, the flagged matches undergo manual review by Apple employees. If the review confirms a match, Apple disables the user’s account and sends a report to NCMEC. Users who believe they have been mistakenly flagged can file an appeal to have their account reinstated.

The company says the technology provides significant privacy benefits over existing techniques since Apple only learns about users’ photos if they have a collection of known CSAM in their iCloud Photos account.

The feature is set to be enabled in iOS 15 and iPadOS 15 later this year.

Privacy experts are concerned that Apple’s move will open the door to mass surveillance by governments around the globe.

The BBC asked Matthew Green, a security researcher at Johns Hopkins University, to offer his take on the matter.

"Regardless of what Apple's long term plans are, they've sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users' phones for prohibited content," Green said. "Whether they turn out to be right or wrong on that point hardly matters. This will break the dam — governments will demand it from everyone."

Some also fear that, if the feature proves successful, the next step might be scanning user accounts for other material, such as content involving drug use or pirated media.


Author

Filip has 15 years of experience in technology journalism. In recent years, he has turned his focus to cybersecurity in his role as Information Security Analyst at Bitdefender.
