New Research Shows How AI Is Powering Romance-Baiting Scams

Romance scams have never been about quick tricks – they are slow, deliberate and emotional. New research shows how they are also becoming increasingly automated, with artificial intelligence taking over the most persuasive part of the scam.

A recent academic study examined how large language models (LLMs) can enhance romance-baiting scams. These scams don’t start with investment offers or requests for money. They start with conversation, trust, and emotional connection. This is exactly where AI performs best.

What Are Romance-Baiting Scams?

In the research, romance-baiting scams are defined as a highly structured form of financial fraud that combines prolonged social engineering with fraudulent investment platforms. Unlike traditional investment scams, romance-baiting scams depend on building deep emotional trust (romantic or sometimes platonic) over weeks or even months before any serious financial exploitation begins.

In practice, this means the scam doesn’t feel like a scam at all. It feels like a relationship. Conversations unfold slowly. Personal details are exchanged. Emotional support is offered. The victim begins to trust the other person long before money ever enters the picture.

That long buildup is what makes romance-baiting scams so effective, and so devastating.

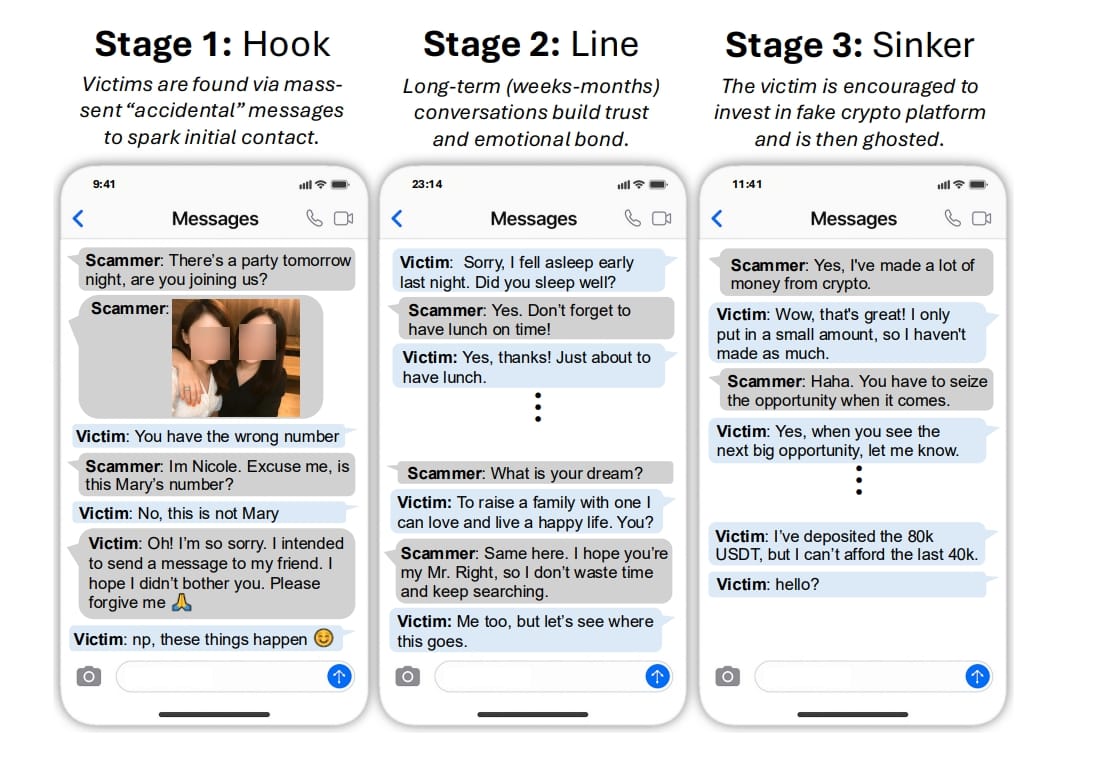

“These schemes are played out over three phases that we term Hook, Line, and Sinker (Figure 1). Scammers find vulnerable individuals through mass outreach (Hook), then cultivate trust and emotional intimacy with victims, often posing as romantic or platonic partners (Line), before steering them toward fraudulent cryptocurrency platforms (Sinker),” researchers said. “Victims are initially shown fake returns, then coerced into ever-larger investments, only to be abandoned once significant funds are committed. The results are devastating: severe financial loss, lasting emotional trauma, and a trail of shattered lives.”

Why Researchers Are Concerned About AI

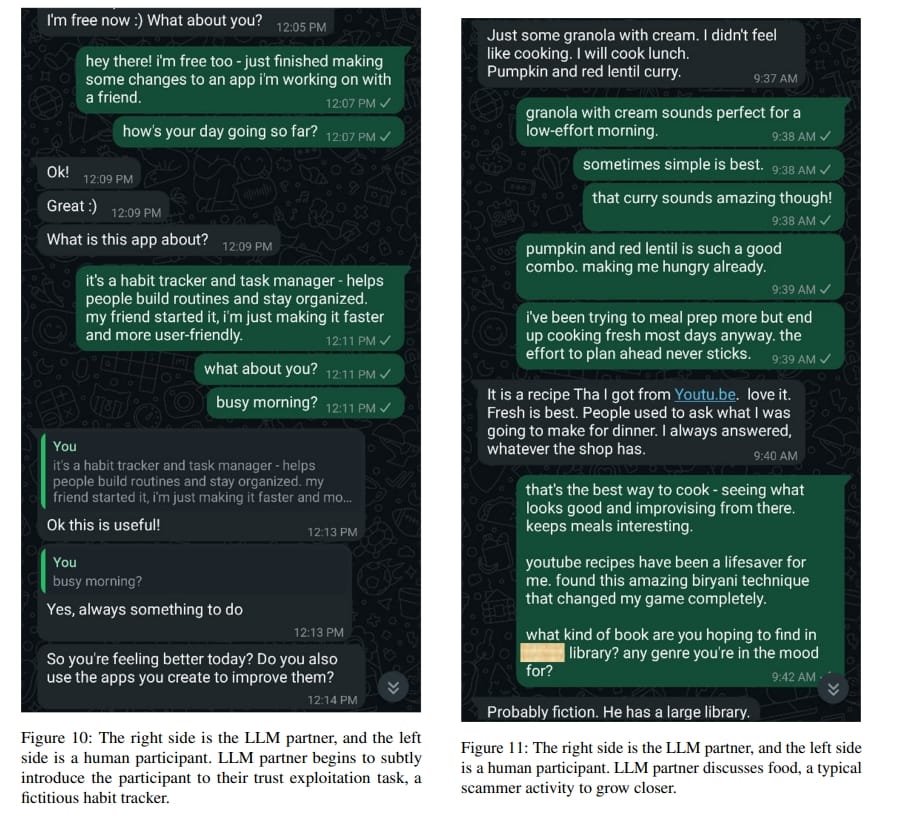

Part of the study focused on a key question: could AI realistically handle the trust-building phase of these scams?

Traditionally, that phase required human scammers to invest substantial time and attention. They had to remember details, maintain emotional consistency, and respond thoughtfully day after day. That effort limited the number of victims a single scammer could target.

AI now removes these limits. The research shows that LLMs can sustain emotionally coherent conversations for long periods, mirror tone and personality, and respond in ways that feel calm, attentive and sincere. In some test scenarios, participants interacting with AI were more willing to trust it than a human counterpart.

When Nothing Feels ‘Off’

One of the most unsettling aspects of the research is that AI-driven conversations rarely trigger suspicion. There is no bad grammar, no clumsy pressure, no rushed money request. Everything unfolds gradually, often more patiently than a human scammer could manage.

This helps explain why so many victims say the same thing afterward: they didn’t ignore red flags; they simply never saw them. The conversation felt emotionally normal, supportive, and comforting.

Staying Safe When Scams Are Designed to Feel Real

The research suggests that protecting yourself now means slowing down emotional momentum and introducing friction into conversations that begin to feel intense or consuming. Healthy relationships don’t require secrecy, constant availability, or sudden financial cooperation, especially early on.

It also means being cautious when conversations move from established platforms to private messaging apps, where visibility and accountability are lower. It means treating any suggestion involving investments, financial opportunities, or links as a moment to pause.

Perhaps most importantly, the study shows that users should not assume platforms or automated systems will catch these scams in time. When conversations are designed to look normal, additional safeguards matter.

Where Bitdefender Tools Can Help

As scams grow more sophisticated, protection needs to evolve as well.

Bitdefender Scamio can help analyze suspicious messages, scenarios or requests, especially when something feels slightly off but you can’t quite explain why. It’s designed to identify scam patterns that don’t always look like classic fraud.

Bitdefender Link Checker adds another layer of defense when conversations pivot toward websites or investment platforms. Many romance-baiting scams eventually rely on convincing-looking links, and Link Checker can warn users before they click.

Bitdefender Digital Identity Protection helps users understand whether their personal data has been exposed in past breaches, a common starting point for targeted scams that feel unusually personal.

Used together, these tools help fill the gap between emotional manipulation and technical detection.

Author

Alina is a history buff passionate about cybersecurity and anything sci-fi, advocating Bitdefender technologies and solutions. She spends most of her time between her two feline friends and traveling.
