They Wear Our Faces: How Scammers Are Using AI to Swindle American Families

This October, Bitdefender launched They Wear Our Faces, a national awareness campaign exposing how scammers exploit trust and relationships by using AI to deceive and impersonate.

The campaign highlights a growing crisis in the United States, where deepfake technology and personalized scams are rapidly moving into the criminal mainstream. By dramatizing how bad actors can clone voices, mimic faces, and impersonate loved ones, They Wear Our Faces challenges the stigma that victims are to blame and opens a conversation about what protection really means in an age of AI-driven fraud.

Why This Matters

The United States has become the world’s primary hunting ground for scammers. According to the Federal Trade Commission (FTC), Americans reported losing more than $12.5 billion to fraud in 2024, a sharp increase over previous years.

Unlike in most other countries, scams in the US arrive through every possible channel: email, SMS, social media, and even seemingly legitimate digital ads. For American consumers, telling genuine offers apart from fraudulent pitches has never been more challenging.

What Bitdefender Telemetry Reveals

Bitdefender data confirms this. Between March and September 2025, the United States received nearly 37% of global spam, making it the world’s primary target. Of the spam received by Americans, 45% was fraudulent or malicious. The leading threats flagged by the Bitdefender Antispam Lab were scams such as account and financial phishing, advance-fee fraud, extortion attempts, and even dating scams. The most impersonated brands reflect the everyday services Americans rely on: Microsoft (18%), Costco (14%), Amazon (12%), American Express (10%), and DocuSign (10%) were among the top lures used to trick users into clicking malicious links or sharing sensitive data.

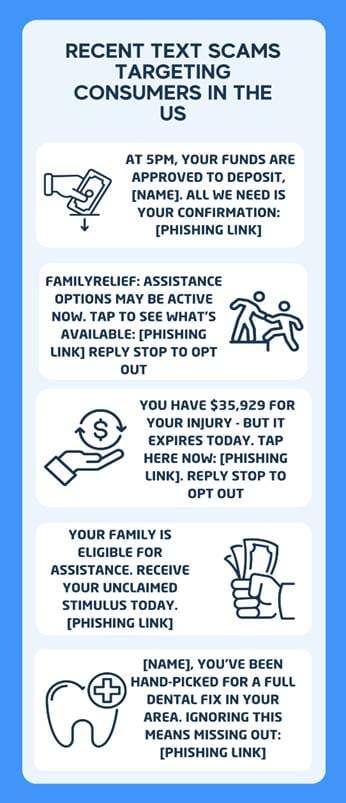

SMS Scams: A Growing Concern for Consumers in the United States

SMS scams are also on the rise. Bitdefender telemetry shows that 10% of US users received at least one SMS scam in the past two months. What makes SMS scams in the United States particularly dangerous is their level of personalization and sophistication. While scams in Europe or Canada often rely on obvious brand impersonation and straightforward attempts to steal card details, US campaigns frequently mimic aggressive but legitimate marketing tactics so convincingly that even cautious users may struggle to tell the difference. Scammers use randomized domains, urgent calls to action, and even the recipient’s name in the message to capture attention, blurring the line between a real promotion and attempted fraud. This realism makes SMS scams targeting American consumers uniquely deceptive and harder to detect.

The top topics flagged between August and September 2025 include:

- Finance (26%)

- Government (14%)

- Entertainment (11%)

- Healthcare (9%)

- Insurance (5%)

- Delivery (3%)

- Prize notifications (3%)

By imitating official programs, financial institutions, or urgent prize alerts, these SMS scams blur the line between legitimate marketing and criminal manipulation, making them particularly hard to spot.

Beyond Email and SMS: Scam Campaigns Supercharged by AI

Bitdefender Labs has also tracked US-focused scam and malware campaigns that leverage AI and social engineering. Our investigations have uncovered:

- Malvertising campaigns on Meta that distributed advanced crypto-stealing malware to Android users.

- Weaponized Facebook ads exploiting cryptocurrency brands in multi-stage malware campaigns.

- Sophisticated LinkedIn recruiting scams run by the Lazarus Group.

- Fake Facebook ad campaigns impersonating Bitwarden, combining brand abuse with malware delivery.

How AI Has Changed the Scam Business

Artificial intelligence has fundamentally reshaped the economics of scamming. What once required teams of fraudsters and weeks of preparation can now be executed in minutes with freely available tools. In short, AI makes scams faster, cheaper, and more convincing.

Recent incidents highlight just how dangerous AI-fueled scams have become:

- The FBI recently warned that scammers impersonated US officials using deepfake technology in coordinated scam campaigns.

- Threat actors have also used AI-generated medical experts in deepfake videos to push bogus treatments and healthcare scams, as reported by Bitdefender Labs.

- In Hong Kong, criminals used a deepfake video call of a CFO to trick a company into transferring more than $25 million to their accounts.

Bitdefender research has documented similar abuses, including deepfake videos of Donald Trump and Elon Musk in looped “podcast” formats, often promoting bogus crypto investments. Scammers also build on old “giveaway” scams, tricking victims into sending cryptocurrency or handing over personal data in exchange for fake rewards.

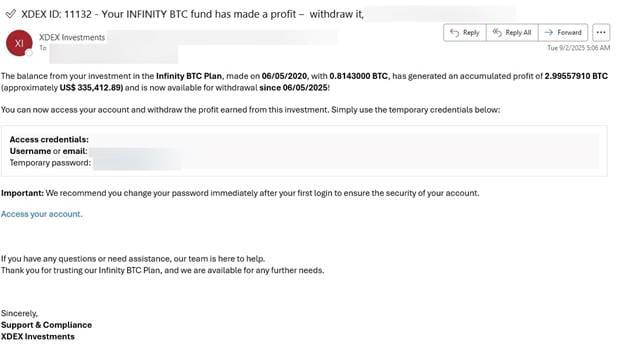

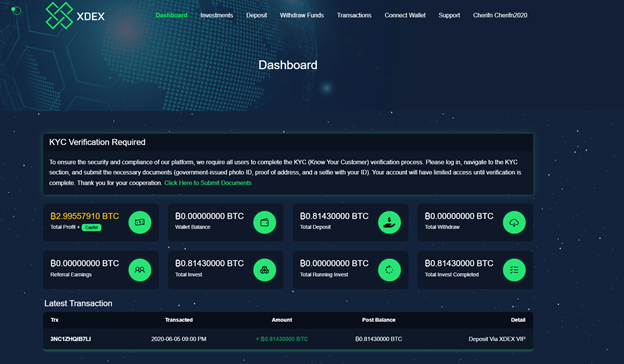

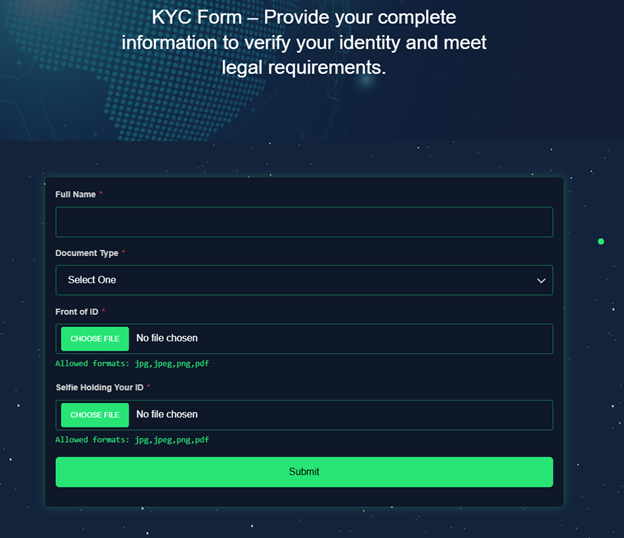

A recent case investigated by Bitdefender illustrates this risk: victims received an email from a fake investment service, XDEX Investments, claiming they had earned over 2.99 BTC in profits from an old “Infinity BTC Plan.” The fraudulent site invited users to log in with temporary credentials, then prompted them to complete a KYC (Know Your Customer) form that demanded not only personal details but also a selfie of the user holding their government-issued ID.

This tactic is especially dangerous in the context of AI-enabled scams and deepfake impersonations. Once criminals obtain such biometric data — a high-quality photo of a face alongside official documents — it can be used to fuel impersonation scams, generate deepfakes, or bypass facial recognition systems. Essentially, scammers are tricking victims into providing the perfect raw material for the next generation of AI-driven fraud.

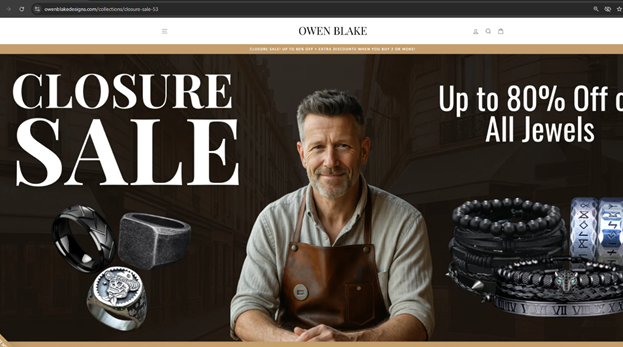

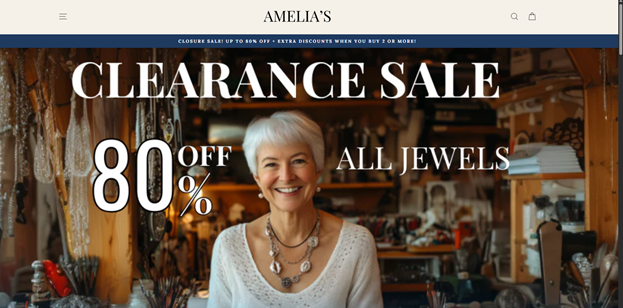

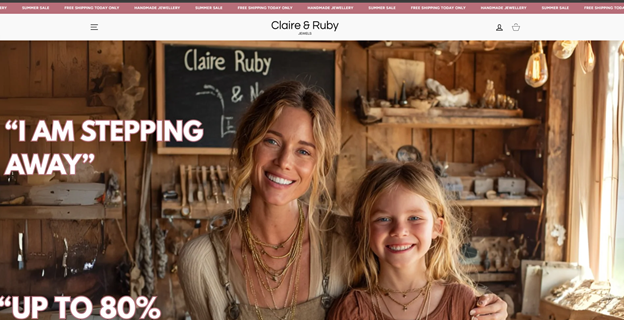

At the same time, other AI-powered scam trends continue to emerge. In the US, Bitdefender has flagged a campaign that pairs AI-generated photos with emotional ad copy in fake “closure sales,” where fraudulent websites create urgency around disappearing deals. With AI, even cybercriminals who don’t speak English can effortlessly generate polished English content, making their scams more convincing than ever.

They Wear Our Faces: From Mass Attacks to Personal Impersonation

The next phase of scams goes beyond mass attacks. Scammers are increasingly adopting highly personalized forms of impersonation, leveraging the very things that make us human—our faces, our voices, and our relationships.

Voice cloning scams now mimic the voices of children or relatives to demand urgent payments. AI-generated videos use stolen social media photos to craft convincing romance or investment pitches. In some cases, biometric data such as faces or voices are harvested from phishing campaigns to fuel future deepfake scams.

Emergency scams also show just how devastating this emotional manipulation can be. Victims may receive a call, email, or social media message from someone claiming to be a distressed family member who was allegedly arrested, hospitalized, or caught in a disaster. The scammer provides convincing details, such as names of relatives or school information, to build credibility.

One version targets parents of college students. The fraudster claims the student has been arrested and that the parents must send bail money immediately via Venmo or PayPal. To heighten the panic, they may even text a fake mugshot, warning that the student will be jailed alongside dangerous criminals unless the payment arrives right away. Terrified parents comply, only to discover later that the story was fabricated.

Another common variation is the “grandparent scam,” where con artists contact older adults pretending to be a grandchild in urgent need of money. Sometimes the scheme is reversed, with the scammer posing as a grandparent pleading for help. In both cases, the plea is so persuasive that victims send money instantly, believing they are saving their loved one.

This is the reality behind Bitdefender’s They Wear Our Faces campaign: scams today don’t just steal money—they steal trust. And in a country where over half of adults still blame scam victims, this stigma only helps criminals thrive.

How to Spot a Deepfake: Quick Checklist for Families

Deepfakes are getting harder to detect, but most still leave subtle clues. If you notice one or more of these red flags, stop and verify before you trust what you see or hear:

- Lip-sync delay – The voice and lip movements don’t quite match, showing a slight lag or full mismatch.

- Audio vs. video mismatch – The sound doesn’t align with the visuals, or details seem off in either the audio or video.

- Strange eye movements – Blinking is too frequent or too rare, the gaze looks frozen, or the eyes shift unnaturally.

- Visual artifacts – Blurry edges, overly smooth skin, unnatural shadows/reflections, or background distortions.

- Unnatural voice – Robotic tone, odd pauses, irregular rhythm, strange accent, or exaggerated delivery.

- Poor quality – Background noise, small glitches, or interruptions in image and sound.

- Limited facial expressions – The face looks stiff or repetitive, struggling to show natural emotions.

- Detail inconsistencies – Jewelry, clothing, teeth, or accessories appear and disappear between frames.

Protecting Families in the Age of AI Scams

The good news is that families can take steps to stay safer:

- Pause and verify: Call back using a trusted number, not the one that contacted you.

- Agree on “safe words” within your family to confirm emergencies.

- Watch for a sense of urgency: Scammers rely on pressure and panic.

- Stay alert to deepfake clues: Look for mismatched audio, unnatural facial movements, or inconsistent details.

Technology can help, too. Bitdefender offers comprehensive security suites that protect families across multiple layers, stopping scams before they reach you. Whether it’s scam detection in email, malicious link filtering, or AI-powered tools like Bitdefender Scamio and Bitdefender Link Checker, Bitdefender shields you from fraudulent content on the platforms where scams spread most. For Android users, Bitdefender also includes call-blocking features that help prevent phone-based scams, such as robocalls and impersonation attempts, which are a common entry point for fraudsters targeting families in the US.

With Bitdefender family plans, households can cover all their devices under one umbrella, combining privacy, identity monitoring, and scam protection in a single, easy-to-manage suite.

Because today, scam protection is no longer just about stopping viruses — it’s about safeguarding trust.

Start your 30-day free trial today!

Author

Alina is a history buff passionate about cybersecurity and anything sci-fi, advocating Bitdefender technologies and solutions. She spends most of her time between her two feline friends and traveling.
