Tesla reverses "Full Self-Driving" beta update after sudden braking reports

This weekend Tesla rolled back an over-the-air "Full Self-Driving" beta update it had sent to some drivers' cars, after reports that a software bug could cause vehicles to brake hard unexpectedly or to display phantom forward-collision warnings.

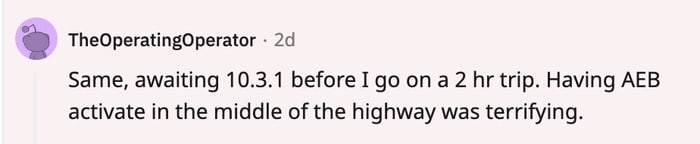

One driver posted on Reddit that it had been "terrifying" when automatic emergency braking (AEB) activated suddenly while they were driving on the highway.

AEB is a safety feature that is designed to detect objects that the car may hit and automatically apply the brakes. One Twitter user quipped that the random AEB events were designed to "keep users alert."

But joking aside, if a car suddenly and unexpectedly brakes while traveling at high speed on the highway, or in dense traffic, then that raises obvious safety issues. For instance, if your Tesla suddenly slammed on its brakes, you could find yourself shunted in the rear by following traffic.

The software update, pushed out as a public beta to thousands of Tesla owners across the United States whose past driving had earned them a good "safety score," was meant to enhance Tesla's "Full Self-Driving" feature, which is itself still in beta.

Instead, it introduced a new danger.

Yes, Tesla did mark the feature as a "beta," but it's no surprise that many Tesla drivers would be keen to try it out, and in doing so put themselves and others at risk.

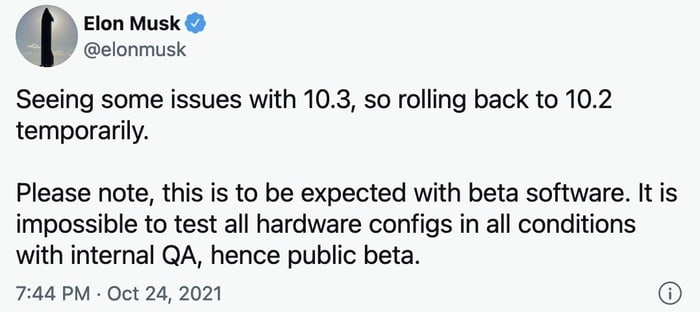

On Sunday, less than a day after it was released, Tesla pulled back the beta update. Tesla chief Elon Musk admitted in a Twitter post that there were "some issues" with the update but that they should be "expected with beta software" as it was "impossible to test all hardware configs in all conditions with internal QA."

Tesla drivers who are publicly beta-testing their car's self-driving capability in the real world are effectively guinea pigs.

But whereas running beta software on your computer typically puts only your own system and data at risk, beta-testing a self-driving car on public roads endangers other drivers who were never given the chance to opt in or opt out.

I think that if I were a Tesla owner, I would let others beta-test new software for a good while before enabling it myself, to reduce the chances of my safety being put at risk. And maybe I'll choose to stay a little further away from other Teslas on the road too...

Author

Graham Cluley is an award-winning security blogger, researcher and public speaker. He has been working in the computer security industry since the early 1990s.
