UK’s ICO Starts Investigating Grok over Non-Consensual Manipulation of Images

The United Kingdom’s data protection authority, the Information Commissioner’s Office (ICO), has opened a formal investigation into X (formerly Twitter) over image manipulation and the use of personal data in connection with the AI system Grok.

The probe follows widespread reports that the AI chatbot was used to generate harmful and explicit imagery.

The ICO’s action comes amid mounting international scrutiny of Grok’s image-generation capabilities, which critics say have been exploited to produce “deepfakes” and other visuals manipulated without individuals’ consent.

Such uses raise serious concerns under UK data protection laws, particularly regarding whether personal data was processed lawfully, fairly, and transparently, and whether robust safeguards were in place during the development and deployment of the technology.

‘Deeply troubling questions’

According to its statement, the ICO will investigate:

- How personal data has been used in Grok’s AI models

- Whether consent and safeguards were implemented to prevent harmful outputs

- Whether X Internet Unlimited Company (XIUC) and X.AI LLC (X.AI) complied with obligations under GDPR and the UK’s Data Protection Act

The regulator emphasized that failures in appropriate protections could leave people vulnerable to serious, immediate harm — particularly when children are involved.

William Malcolm, the ICO’s executive director of Regulatory Risk and Innovation, commented that the reports raise “deeply troubling questions” about the handling of personal data and the extent to which individuals may have lost control over their own information.

A broader regulatory backdrop

The ICO’s investigation builds on earlier engagements with Grok’s operators. On January 7, 2026, the watchdog publicly confirmed it had contacted XIUC and X.AI to urgently request information about reported issues with Grok’s outputs and the data governance measures supporting them.

This action also runs alongside a separate probe by the UK’s online safety regulator, Ofcom, which is examining whether the platform has complied with the Online Safety Act — including obligations to protect users from illegal or harmful content, such as non-consensual intimate images.

Regulators in the European Union and other jurisdictions have also begun their own reviews into Grok’s functionality and potential harms, sparking a wave of concern about AI content governance.

Read: EU Opens Probe into Grok Image Manipulation and X’s Recommender System

Most recently, the Paris prosecutor's office (Parquet de Paris) is investigating alleged algorithmic manipulation by foreign powers.

Consumers fear AI-created materials

The ICO’s investigation underscores a growing regulatory focus on AI accountability, especially around generative models that can manipulate or create fabricated representations of real people.

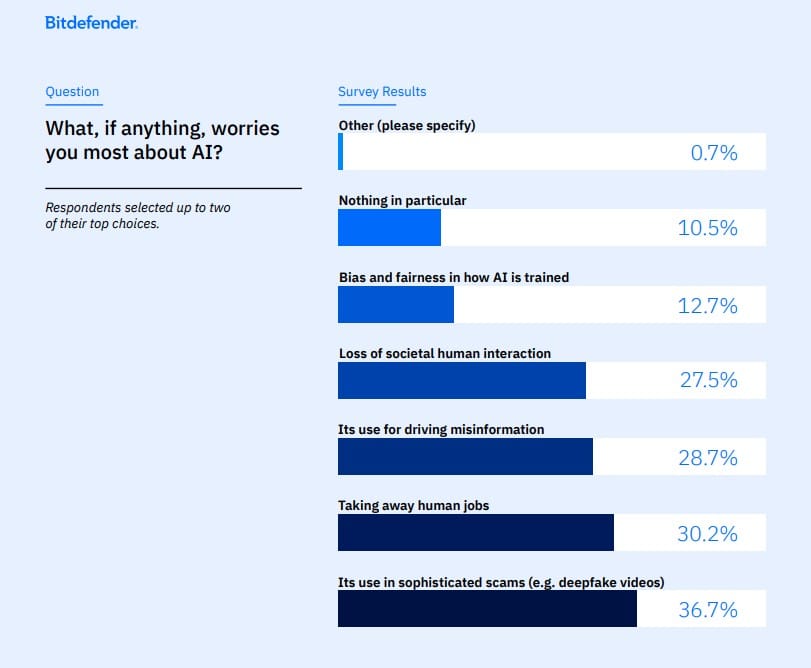

Consumers are becoming increasingly aware of the risks of AI-generated content. According to the Bitdefender 2025 Consumer Cybersecurity Survey, more than a third of respondents named the creation of deepfake videos as one of their biggest concerns.

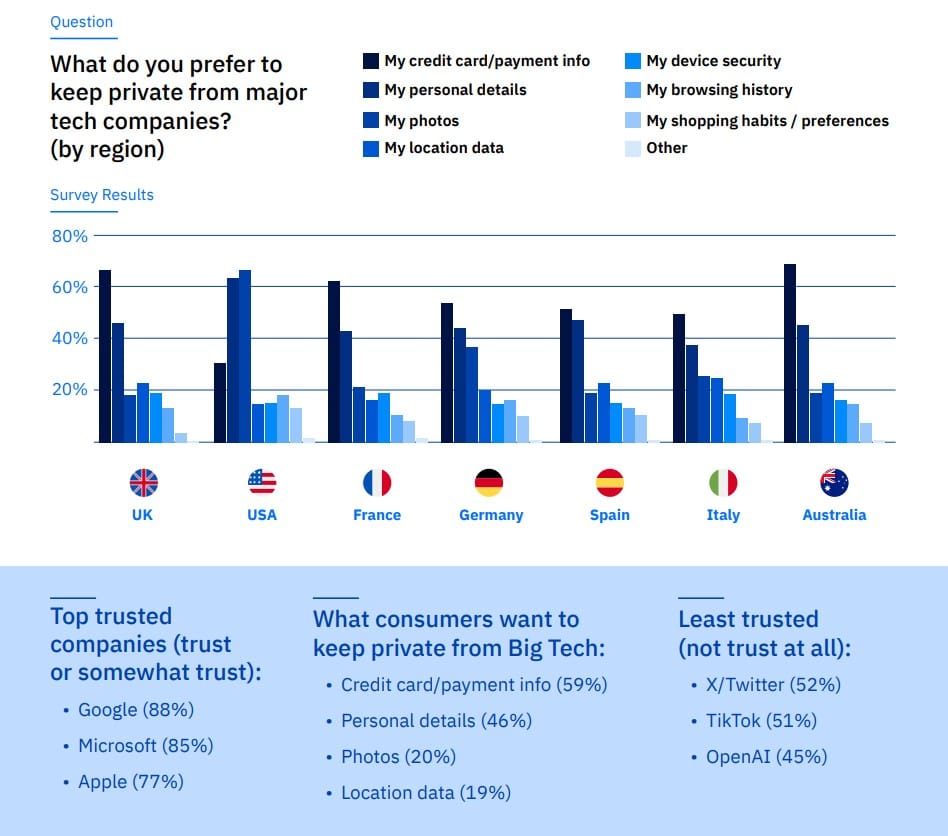

Trust in ‘Big Tech’ often differs by geography. Europeans tend to be stricter about safeguarding personal details, shaped by years of GDPR-driven awareness. While American consumers focus on convenience, Europeans are more attuned to privacy.

Amid mounting scrutiny of X, it’s worth noting that participants in our survey, which polled more than 7,000 internet users across seven countries from June to September 2025, expressed skepticism about X’s conduct on privacy and security, with 52% naming it a “least trusted” player.

As European regulators assess whether the necessary safeguards were put in place to prevent misuse of Grok’s image-manipulation capabilities, companies offering similar AI technologies will likely face mounting pressure to implement strong guardrails.

You may also want to read:

Apple Taps Google’s Gemini to Power Siri, Says Privacy Remains a Priority

Europe Slaps Tech Sector with €1.2 Billion in Fines under GDPR in 2025

Europe Fines X €120 Million in First Enforcement of the Digital Services Act

Author

Filip has 17 years of experience in technology journalism. In recent years, he has focused on cybersecurity in his role as a Security Analyst at Bitdefender.
